When Scaling Bites Back: A $200/Day Lesson in Execution

How I bled money crossing spreads like an ape, and what I built to fix it

I’ve been scaling the allocation to a strategy on a fairly new market… and in doing so, I’ve hit a wall: good ol’ market capacity.

All was fun and games when I was trading a small portfolio, purely focused on high capacity, low frequency models.

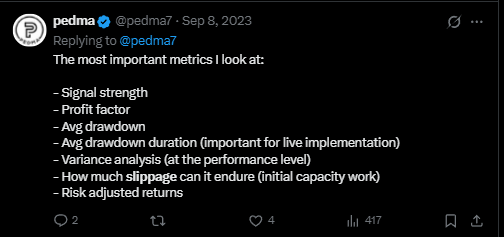

So this issue is relatively new, at least in practical terms. Obviously I’ve spent a lot of time on it over the years, and we talk about it almost every chance we get… We’ve ALWAYS factored in costs and slippage, even in the most naive of simulations. Here’s a tweet I just randomly found from 2023 talking about it. I can go back to 2020 and even earlier; as far as I remember, I’ve always considered costs.

But tbh, I had never REALLY faced them. I didn’t have enough capital for it to be a real issue. Knowing about it theoretically and having to solve for it are two completely different ball games.

Today we have a clear example of why we say that backtests are mere sanity tests… The more I scale into new strategies, and new markets, the more I find that to be true.

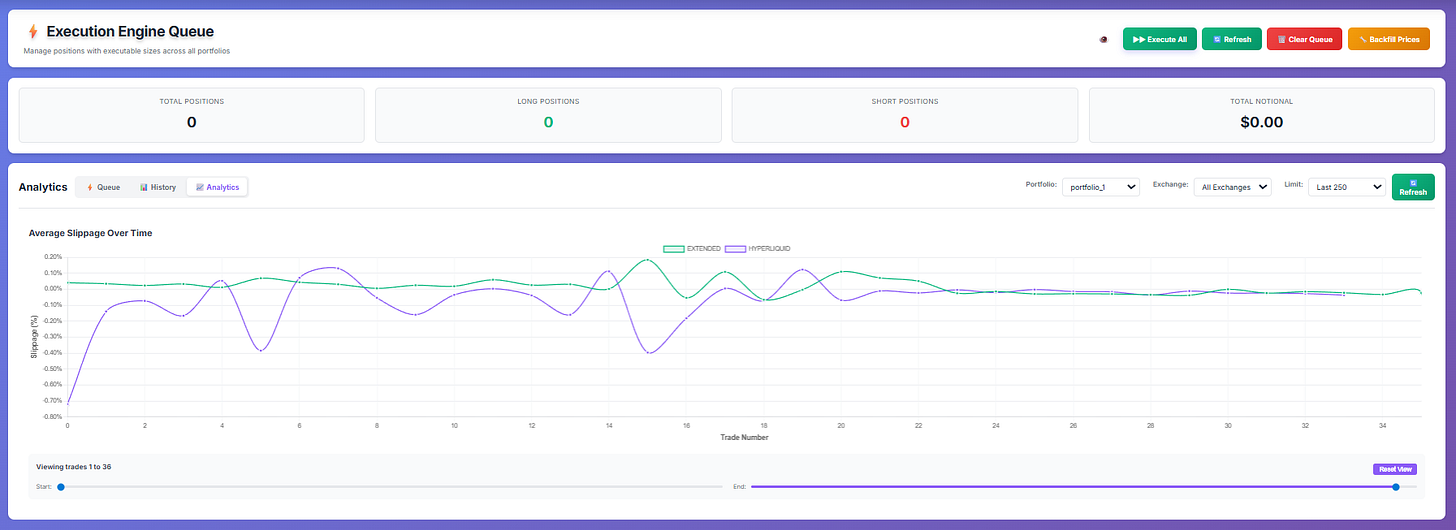

Also, this is not just theoretical nonsense that adds no PnL. Saving on costs is one of the most important things you can do in your own trading. To give you an example, from the past week alone, I am probably saving between $200 and $400 A DAY in execution costs on this particular strategy.

You might look at that dashboard and say:

“Did you really pay 40bps to trade? Are you dumb?”

Well… a bit, but allow me to give you some context on where it all started and why I let it run for longer than I should have.

There’s this new market, which I won’t name, that has pretty restricted capacity. To be honest with you, I didn’t expect to be paid a lot to trade there; I just wanted an allocation so I could farm future reward programs. That’s it. That was the whole purpose.

In crypto there are these things we call “metas”, traditionally known as trends. Basically, after Hyperliquid TGE’d (its token generation event), a bunch of people got rich from its airdrop. For months, there was nothing to talk about other than Hyperliquid.

That captures a lot of eyeballs… and with that much attention, a lot of teams saw opportunity. They either began developing competing protocols or, if they were already building one, adopted reward structures similar to Hyperliquid’s.

So I began betting on all of the most prominent new markets/projects by being very early with my exposures. Let me give you a few examples:

I was early to Hyperliquid and got a decent airdrop from it.

I was early to Lighter, barely did anything there, and got a $10k airdrop from it.

I am early to Extended, with more than 11k points at the moment; valuation estimates fall between $30k and $50k. I even wrote this article in March.

I am farming Variational.

and others…

This is one of the things I’ve been doing to add a “cherry” on top of my systems: just being early to the next new thing with potential. Obviously there are added counterparty risks here, but that’s a topic for another day.

The same applied to this new market. I wanted to be early, had a few heuristics for a model that seemed decent enough to deploy, and went ahead with it. I didn’t have a backtest for it and had no idea if it would work out, but I see that as a plus. Whenever something is weird and hard for me to estimate, it is just as hard for my competitors. And I don’t shy away from taking risk.

But that often comes with problems... When you’re in unknown territory, you will run into issues. Mine was execution.

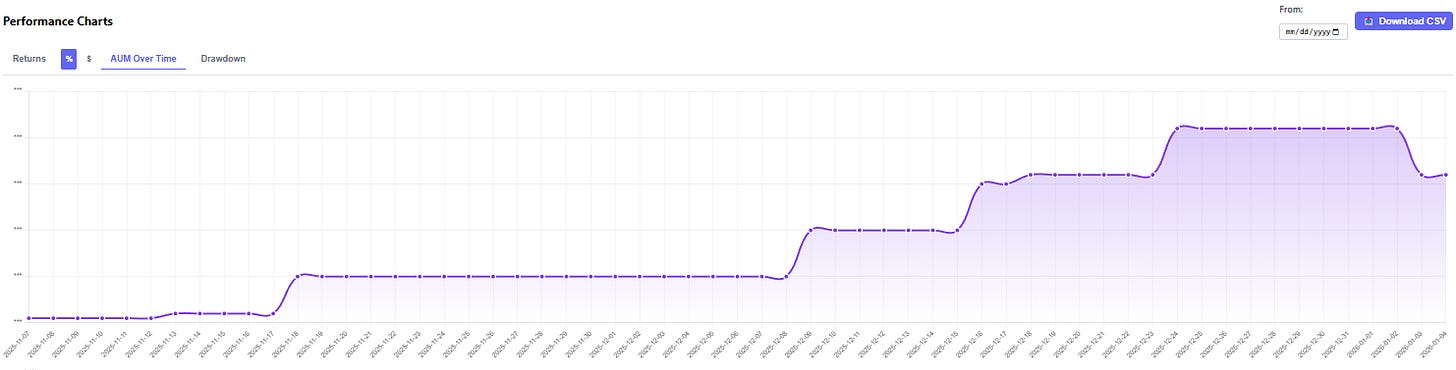

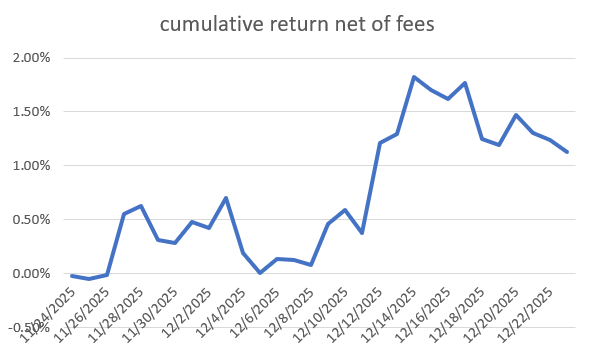

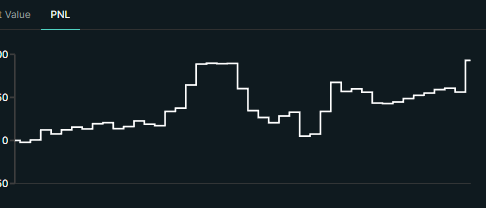

A few weeks pass, and the model is making consistent, decent returns. Best of all, uncorrelated to the market.

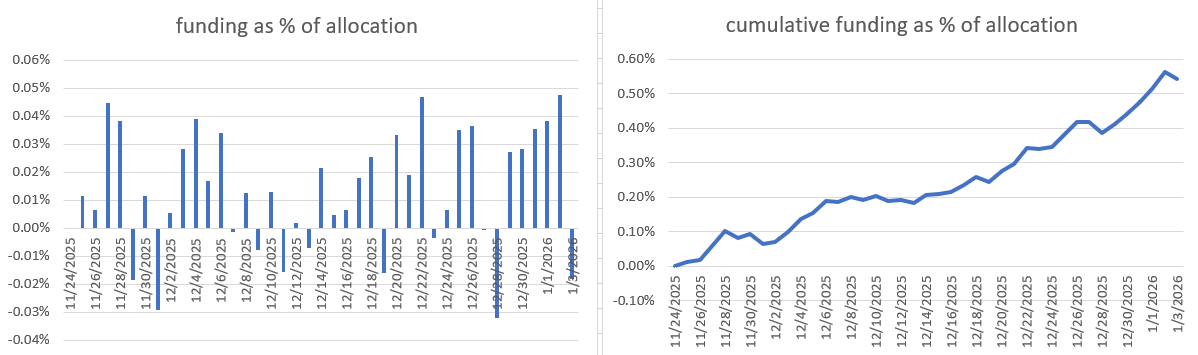

(If you’re wondering why the Excel and the exchange’s equity curves are different, it’s because of sizing. As the model started performing well, I began sizing up, and that causes the % returns to differ from the $ returns. Just wanted to point that out.)
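To make the sizing point concrete, here is a minimal sketch with made-up numbers (not my actual data): two days of +2% on a small allocation, then a top-up right before a -2% day. `equity_paths` is a hypothetical helper, and it assumes deposits land at the start of the day, before that day’s PnL.

```python
def equity_paths(daily_returns, deposits):
    """Compounded % curve vs cumulative $ PnL for a strategy that keeps getting topped up.

    daily_returns: fractional return per day (0.02 means +2%).
    deposits: capital added at the start of each day; the first entry is the initial allocation.
    """
    pct_curve, dollar_pnl = [], []
    growth, capital, pnl = 1.0, 0.0, 0.0
    for r, d in zip(daily_returns, deposits):
        capital += d            # size up before the day's trading (assumption)
        day_pnl = capital * r   # $ result depends on how much capital was deployed
        capital += day_pnl
        pnl += day_pnl
        growth *= 1.0 + r       # % result ignores how much capital was deployed
        pct_curve.append(growth - 1.0)
        dollar_pnl.append(pnl)
    return pct_curve, dollar_pnl


pct, usd = equity_paths([0.02, 0.02, -0.02], [10_000, 0, 90_000])
print(f"% return: {pct[-1]:+.2%}, $ PnL: {usd[-1]:+,.2f}")
```

The compounded % curve ends at about +1.96%, while the cumulative $ PnL ends at about -$1,604, because the losing day happened on roughly ten times the capital of the winning days.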

The funding I was collecting was also fairly consistent over time. (Just from this comment, you can probably guess what I was doing, eheh.)

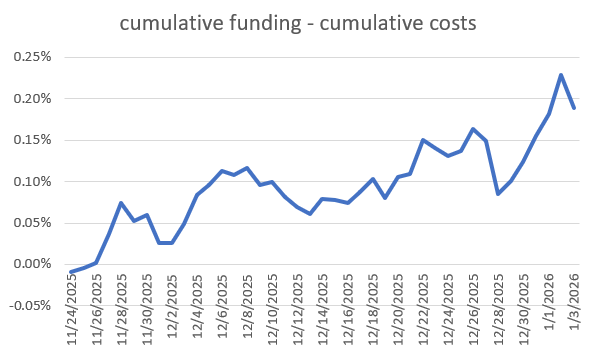

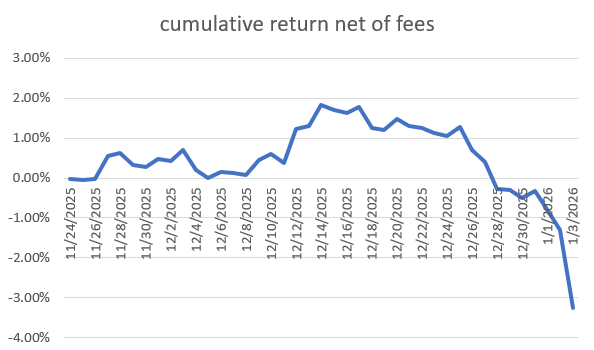

If we look at the cumulative funding net of the cumulative costs, we can see that it was doing pretty well, even though the costs weren’t anywhere near as efficient as I wanted them to be. I’ll talk about that later in the post.
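For anyone who wants to build that view from their own exports, a minimal sketch: cumulative funding minus cumulative execution costs (fees plus slippage), period by period. The function name and the figures are hypothetical, and a real export would first need to be aggregated per day.

```python
from itertools import accumulate


def net_funding_curve(funding, costs):
    """Cumulative funding received minus cumulative execution costs, period by period.

    funding: funding received each period (negative if paid).
    costs: execution costs each period (fees + slippage), as positive numbers.
    """
    if len(funding) != len(costs):
        raise ValueError("funding and costs must have the same length")
    return [f - c for f, c in zip(accumulate(funding), accumulate(costs))]


# Hypothetical daily figures: a costly entry, then cheaper maintenance trades
print(net_funding_curve([50, 60, 55, 70], [120, 10, 15, 10]))
```

The curve starts negative because of the entry cost and turns positive once enough funding has accrued, which is why the net view says more than either series alone.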

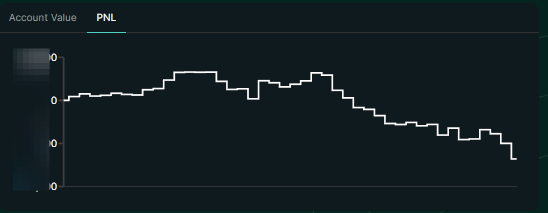

As it became a decent contributor of PnL to my portfolio, I went ahead and scaled size. But I did not do it properly. I did it like an ape: I increased the allocation to that strategy to over 6 figures overnight.

As I should’ve expected, over the next few weeks this happened…

Well... it isn’t a big loss. Right now it sits at -3% of the total allocation to that particular strategy, which is just a portion of the portfolio. But it shouldn’t have taken me this long to realize what was going on…

I was getting extremely bad execution. As I dug through all the records, which, to be fair, have been pretty poor in the new infrastructure I’ve been building over the last 3 months, I noticed it came down to a few things:

Adding more size than that market’s capacity allowed (obviously).

As more markets were added, I added them to the pool of tradable assets, once again, completely disregarding capacity.

Crossing the spread with no controls.

Being too careless about margin requirements.
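Two of those issues, capacity and uncontrolled spread crossing, can at least be guarded at the order level. What follows is a minimal sketch of that kind of guard, not the exact logic I deployed: it caps each slice at a fraction of the visible top-of-book size and only allows crossing when the spread is below a threshold. `BookTop`, `plan_order`, `max_depth_frac` and `max_spread_bps` are hypothetical names, and the default thresholds are placeholders that would need calibrating per market.

```python
from dataclasses import dataclass


@dataclass
class BookTop:
    bid: float
    ask: float
    bid_size: float  # quantity resting at the best bid
    ask_size: float  # quantity resting at the best ask


def plan_order(side, desired_qty, book, max_depth_frac=0.2, max_spread_bps=10.0):
    """Return (qty, cross) for the next slice of an order.

    qty is capped at a fraction of the size on the side we would trade against;
    cross=False means rest a passive order instead of paying the spread.
    """
    if side not in ("buy", "sell"):
        raise ValueError("side must be 'buy' or 'sell'")
    mid = 0.5 * (book.bid + book.ask)
    spread_bps = (book.ask - book.bid) / mid * 1e4
    opposite_size = book.ask_size if side == "buy" else book.bid_size
    qty = min(desired_qty, max_depth_frac * opposite_size)  # capacity cap per slice
    cross = spread_bps <= max_spread_bps                     # no blind spread crossing
    return qty, cross


wide = BookTop(bid=99.9, ask=100.1, bid_size=100, ask_size=100)     # ~20 bps spread
tight = BookTop(bid=99.99, ask=100.01, bid_size=100, ask_size=100)  # ~2 bps spread
print(plan_order("buy", 50, wide))    # capped slice, posted passively
print(plan_order("sell", 5, tight))   # small enough to take immediately
```

Whatever remains of `desired_qty` would be carried into a later slice once the book refills; that scheduling part is left out of the sketch.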

So enough is enough, I said, something had to be done…

As a side note, I’ve been trying to set aside time to focus strictly on signal/feature research efficiency. One of my priorities for this year is to make the existing models more robust and also deploy new ones. But something always seems to come up. Hopefully, after these last few days building tools to be a bit smarter about trade execution, we can move on to better things.

Let’s analyze the costs I incurred trading this new market. I’ve exported all of my data and will go through it bit by bit, and then we’ll go over what I’ve implemented based on this newly learned lesson. I make mistakes, and I make them often, as you can see, but I don’t accept making the same mistake twice. That is not acceptable.

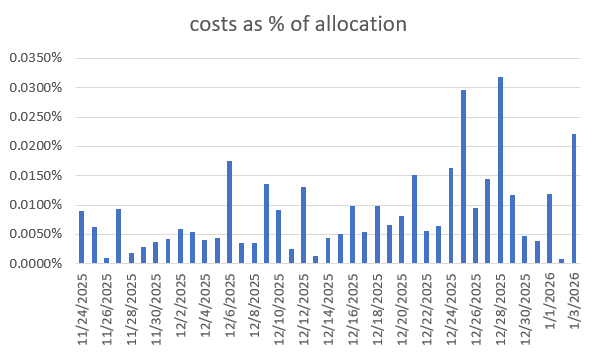

One interesting metric is costs as a function of allocation. We can see that they began increasing a lot when we moved our size up on the 24th of November.
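A minimal sketch of that metric, assuming one row per day with the $ cost and the $ allocation: express each day’s cost in basis points of the allocation and compare the averages before and after the scale-up date. The helper and the figures are hypothetical, and since the post doesn’t state the year, the year in the example is an assumption too.

```python
from datetime import date
from statistics import mean


def cost_bps_before_after(rows, scale_up):
    """Mean daily cost in bps of allocation, before vs from the scale-up date.

    rows: iterable of (day, cost_usd, allocation_usd).
    """
    before, after = [], []
    for day, cost, allocation in rows:
        bps = cost / allocation * 1e4
        (after if day >= scale_up else before).append(bps)
    return mean(before), mean(after)


rows = [  # hypothetical figures
    (date(2025, 11, 22), 30, 20_000),
    (date(2025, 11, 23), 32, 20_000),
    (date(2025, 11, 24), 380, 100_000),
    (date(2025, 11, 25), 420, 100_000),
]
print(cost_bps_before_after(rows, scale_up=date(2025, 11, 24)))
```

Normalizing by allocation matters here: $ costs rising after a 5x scale-up is expected, but bps of allocation more than doubling is not.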

However, now a question comes up:

But wait a second, how are costs scaling as a function of capital? Are you trading more often? Is the daily turnover higher now than it was before?

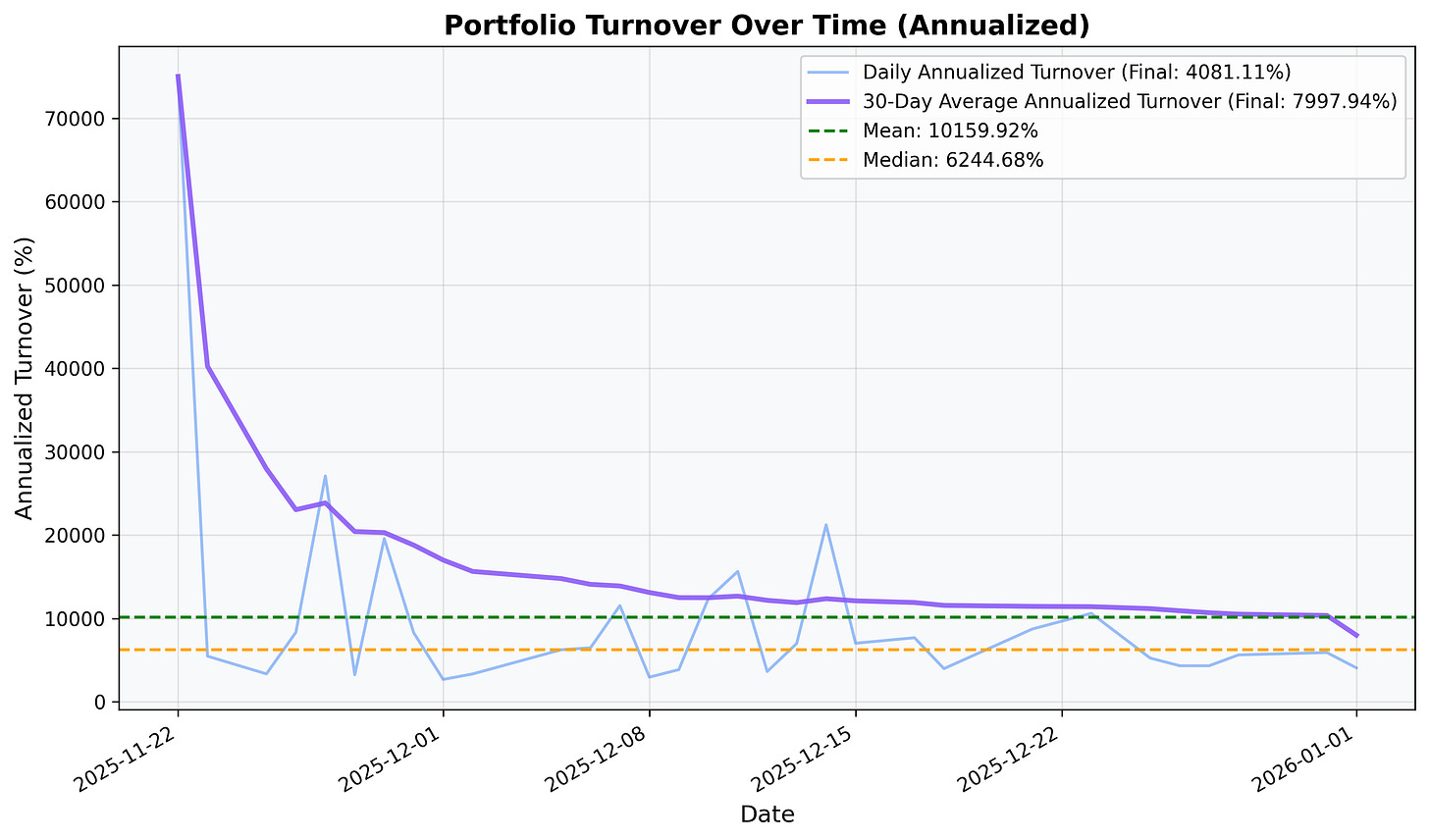

Well, yes and no. I should have a similar amount of turnover as before, because nothing fundamentally changed about the strategy. But don’t take my word for it; my intuition could be wrong. Let’s look at the modeled turnover over time.

We can see that, despite being an absurd amount (which we’ll get into later), it’s pretty stable, according to my estimates.
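The reasoning here rests on a simple identity: cost on capital = turnover × cost per traded dollar. If turnover stays flat while cost on capital climbs, the extra cost has to come from each traded dollar getting more expensive, i.e. worse execution. A minimal sketch with hypothetical numbers (`decompose_costs` is an illustrative name):

```python
def decompose_costs(cost, traded_notional, capital):
    """Split cost on capital into turnover and cost per traded dollar.

    Identity: cost / capital = (traded_notional / capital) * (cost / traded_notional)
    """
    turnover = traded_notional / capital             # how many times the capital is traded
    per_traded_bps = cost / traded_notional * 1e4    # execution quality
    on_capital_bps = cost / capital * 1e4            # what shows up on the dashboard
    return turnover, per_traded_bps, on_capital_bps


# Hypothetical days: same turnover, 5x the capital
for label, args in [("before", (30, 60_000, 20_000)), ("after", (400, 300_000, 100_000))]:
    t, per_traded, on_capital = decompose_costs(*args)
    print(f"{label}: turnover {t:.1f}x, {per_traded:.1f} bps per traded $, {on_capital:.1f} bps on capital")
```

In the example, turnover is 3x in both periods, but each traded dollar goes from about 5 bps to about 13.3 bps, which alone pushes cost on capital from 15 bps to 40 bps.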

I think the increase in costs comes from the fact that I was having way more “problems” than before. I was now getting picked off, like this morning, when I got liquidated at 1 am. I can’t show the chart without disclosing my wallet or the market I am trading, since it was pretty illiquid at the time. But being liquidated on a specific pair was becoming a regular occurrence, for reasons external to my system, like someone manipulating the price overnight. Real-world trading type things, innit?
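On the margin side, a minimal sketch of the kind of check that would flag those positions: compute a simplified isolated-margin liquidation price for a linear perp and the distance from the current mark. It assumes liquidation triggers when equity (margin plus unrealized PnL) falls to `mmr × qty × price`, and it ignores fees, funding and each venue’s own rules, so the actual formula should come from the exchange’s documentation. The function names and the 20% buffer are hypothetical.

```python
def liquidation_price(entry, qty, margin, mmr, side):
    """Simplified isolated-margin liquidation price for a linear perpetual.

    Assumes liquidation when margin + unrealized PnL == mmr * qty * price.
    Ignores fees, funding and venue-specific rules.
    """
    if side == "long":   # margin + qty*(p - entry) = mmr*qty*p
        return (entry - margin / qty) / (1.0 - mmr)
    if side == "short":  # margin + qty*(entry - p) = mmr*qty*p
        return (entry + margin / qty) / (1.0 + mmr)
    raise ValueError("side must be 'long' or 'short'")


def has_enough_buffer(mark, liq_price, min_buffer=0.2):
    """True if the mark price is at least min_buffer (fractional) away from liquidation."""
    return abs(mark - liq_price) / mark >= min_buffer


# Hypothetical position: 5x leverage (2,000 margin on 10,000 notional), 5% maintenance
liq = liquidation_price(entry=10.0, qty=1_000, margin=2_000, mmr=0.05, side="long")
print(round(liq, 4), has_enough_buffer(mark=10.0, liq_price=liq))
```

Even 5x, which sounds conservative, leaves less than 16% of room in this example, and on a thin book that is the kind of distance an overnight wick can cover.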